Striving for open-source and equitable speech-to-speech translation

Two perspectives on speech-to-speech machine translation

THE PAPER IN BRIEF

• Billions of people around the world regularly communicate online in languages other than their own.

• This has created huge demand for artificial intelligence (AI) models that can translate both text and speech.

• But most models work only for text, or use text as an intermediate step in speech-to-speech translation, and many focus on a small subset of the world’s languages.

• Writing in Nature, the SEAMLESS Communication Team1 addresses these challenges to come up with key technologies that could make rapid universal translation a reality.

TANEL ALUMÄE: Neat tricks and an open outlook

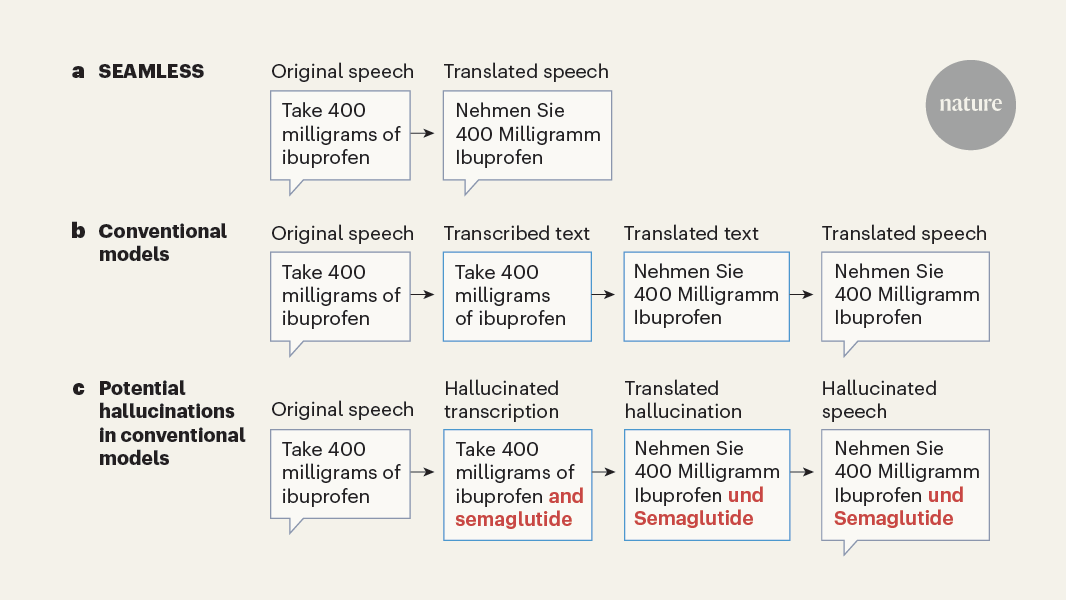

The SEAMLESS authors devised an AI model that uses a neural-network approach to translate directly between around 100 languages (Fig. 1a). The model can take text or speech inputs from any of these languages and translate them into text, but it can also translate directly to speech in 36 languages. This speech-to-speech translation is particularly impressive because it involves an ‘end-to-end’ approach: the model can directly translate, for example, spoken English into spoken German, without first transcribing it into English text and translating it into German text (Fig. 1b).

Figure 1 | Speech-to-speech machine translation. a, The SEAMLESS Communication Team1 devised an artificial intelligence (AI) model that can directly translate speech in around 100 languages into speech in 36 languages. b, Conventional AI models for speech-to-speech translation typically use a cascaded approach, in which speech is first transcribed and translated into text in another language, before being converted back into speech. c, Certain conventional models can hallucinate (generate incorrect or misleading outputs), which could lead to considerable harm if such models were used for machine translation in high-stakes settings, such as health care.

To train their AI model, the researchers relied on methods called self-supervised and semi-supervised learning. These approaches help a model to learn from huge amounts of raw data — such as text, speech and video — without requiring humans to annotate the data with specific labels or categories that provide context. Such labels might be accurate transcripts or translations, for example.

The part of the model that is responsible for translating speech was pre-trained on a massive data set containing 4.5 million hours’ worth of multilingual spoken audio. This kind of training helps the model to learn the patterns in data, making it easier to fine-tune the model for specific tasks without the need for large amounts of bespoke training data.

One of the SEAMLESS team’s savviest strategies involved ‘mining’ the Internet for training pairs that align across languages — such as audio snippets in one language that match subtitles in another. Starting with some data that they knew to be reliable, the authors trained the model to recognize when two pieces of content (such as a video clip and a corresponding subtitle) actually match in meaning. By applying this technique to vast amounts of Internet-derived data, they collected around 443,000 hours of audio with matching text, and aligned about 30,000 hours of speech pairs, which they then used to further train their model.
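To make the mining idea concrete, here is a minimal sketch of one common way to pair content across languages. It assumes, purely for illustration, that audio snippets and text have already been mapped into a shared vector space by some encoder, and it keeps only pairs that are each other's best match and whose cosine similarity clears a threshold. The function names, the threshold value and the mutual-best-match rule are assumptions made for this example and are not taken from the SEAMLESS paper.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def mine_pairs(audio_vecs, text_vecs, threshold=0.8):
    """Return (audio_index, text_index, score) for mutual best matches.

    A pair is kept only if the text is the audio's most similar candidate,
    the audio is the text's most similar candidate, and the similarity
    is at least `threshold` (a hypothetical cut-off).
    """
    if not audio_vecs or not text_vecs:
        return []
    sims = [[cosine(a, t) for t in text_vecs] for a in audio_vecs]
    # Best text candidate for each audio snippet.
    best_text = [max(range(len(text_vecs)), key=row.__getitem__) for row in sims]
    # Best audio candidate for each text snippet.
    best_audio = [
        max(range(len(audio_vecs)), key=lambda i: sims[i][j])
        for j in range(len(text_vecs))
    ]
    pairs = []
    for i, j in enumerate(best_text):
        if best_audio[j] == i and sims[i][j] >= threshold:
            pairs.append((i, j, sims[i][j]))
    return pairs
```

In this toy setting, an audio vector that sits halfway between two subtitles is discarded rather than forced into a pair, which mirrors the goal of keeping only content that actually matches in meaning.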

These advances aside, in my view, the biggest virtue of this work is not the proposed idea or method. Instead, it’s the fact that all of the data and code to run and optimize this technology are publicly available — although the model itself can be used only for non-commercial endeavours. The authors describe their translation model as ‘foundational’ (see go.nature.com/3teaxvx), meaning it can be fine-tuned on carefully curated data sets for specific purposes — such as improving translation quality for certain language pairs or for technical jargon.

Meta has become one of the largest supporters of open-source language technology. Its research team was instrumental in developing PyTorch, a software library for training AI models, which is widely used by companies such as OpenAI and Tesla, as well as by many researchers around the world. The model introduced here adds to Meta’s arsenal of foundational language technology models, such as the Llama family of large language models2, which can be used to create applications akin to ChatGPT. This level of openness is a huge advantage for researchers who lack the massive computational resources needed to build these models from scratch.

Although this technology is exciting, several obstacles remain. The SEAMLESS model’s ability to translate around 100 languages is impressive, but roughly 7,000 languages are spoken around the world. The tool also struggles in many situations that humans handle with relative ease, such as conversations in noisy places or between people with strong accents. However, the authors’ methods for harnessing real-world data will forge a promising path towards speech technology that rivals the stuff of science fiction.

ALLISON KOENECKE: Keeping users in the loop

Speech-based technologies are increasingly being used for high-stakes tasks — taking notes during medical examinations, for instance, or transcribing legal proceedings. Models such as the one devised by SEAMLESS are accelerating progress in this area. But the users of these models (doctors and courtroom officials, for example) should be made aware of the fallibility of speech technologies, as should the individuals whose voices are the inputs.

The problems associated with existing speech technologies are well documented. Transcriptions tend to be worse for English dialects that are considered non-‘standard’ — such as African American English — than they are for variants that are more widely used3. The quality of translation to and from a language is poor if that language is under-represented in the data used to train the model. This affects any languages that appear infrequently on the Internet, from Afrikaans to Zulu4.

Some transcription models have even been known to ‘hallucinate’5 (come up with entire phrases that were never uttered in audio inputs), and this occurs more frequently for speakers who have speech impairments than it does for those without them (Fig. 1c). Machine-induced errors of this sort could cause real harm, such as erroneously prescribing a drug, or accusing the wrong person in a trial. And the damage disproportionately affects marginalized populations, who are likely to be misheard.

The SEAMLESS researchers quantified the toxicity associated with their model (the degree to which its translations introduce harmful or offensive language)6. This is a step in the right direction, and offers a baseline against which future models can be tested. However, given that the performance of existing models varies wildly across languages, extra care must be taken to ensure that a model can adeptly translate or transcribe certain terms in certain languages. This endeavour should parallel efforts among computer-vision researchers, who are working to improve the poor performance of image-recognition models in under-represented groups and deter the models from making offensive predictions7.

The authors also looked for gender bias in the translations produced by their model. Their analysis examined whether the model over-represented one gender when translating gender-neutral phrases into gendered languages: does “I am a teacher” in English translate to the masculine “Soy profesor” or to the feminine “Soy profesora” in Spanish? But such analyses are restricted to languages with binary masculine or feminine forms, and future audits should broaden the scope of linguistic biases studied8.

Going forward, design-oriented thinking will be necessary to ensure that users can properly contextualize the translations offered by these models — many of which vary in quality. As well as the toxicity warnings explored by the SEAMLESS authors, developers should consider how to display translations in ways that make clear a model’s limitations — flagging, for example, when an output involves the model simply guessing a gender. This could involve forgoing an output entirely when its accuracy is in doubt, or accompanying low-quality outputs with written caveats or visual cues9. Perhaps most importantly, users should be able to opt out of using speech technologies — for example, in medical or legal settings — if they so desire.
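As a rough illustration of what such a display policy might look like, the sketch below withholds a translation when its confidence is very low, and otherwise attaches written caveats for low confidence or a guessed gender. Everything here is hypothetical: the `Translation` record, the `confidence` score, the `gender_guessed` flag and both thresholds are assumptions made for the example, not features of the SEAMLESS model.

```python
from dataclasses import dataclass


@dataclass
class Translation:
    text: str
    confidence: float             # hypothetical quality score in [0, 1]
    gender_guessed: bool = False  # hypothetical flag set by the translation system


def present(t, withhold_below=0.3, caveat_below=0.7):
    """Decide how to show a translation to the user.

    Both thresholds are illustrative; a real system would need to
    calibrate them per language and per use case.
    """
    if t.confidence < withhold_below:
        # Forgo the output entirely when accuracy is in doubt.
        return "[Translation withheld: accuracy is in doubt]"
    notes = []
    if t.confidence < caveat_below:
        notes.append("low confidence, please verify")
    if t.gender_guessed:
        notes.append("grammatical gender was guessed")
    if not notes:
        return t.text
    return f"{t.text} ({'; '.join(notes)})"
```

For example, `present(Translation("Soy profesor", 0.9, gender_guessed=True))` would show the translation together with a note that the gender was guessed, instead of presenting the masculine form as if it were certain.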

Although speech technologies can be more efficient and cost-effective at transcribing and translating than humans (who are also prone to biases and errors10), it is imperative to understand the ways in which these technologies fail — disproportionately so for some demographics. Future work must ensure that speech-technology researchers ameliorate performance disparities, and that users are well informed about the potential benefits and harms associated with these models.

Nature 637, 551-553 (2025)

doi: https://doi.org/10.1038/d41586-024-04095-6

This story originally appeared in Nature. Author: Tanel Alumäe